The Agentic Development Environment

Where local, cloud, interactive, and autonomous agents come together, and developers ship faster without losing control.

Build with agents across the software development lifecycle

Plan

Prototype

Build

Review + Merge

Validate

Deploy + Monitor

Weekly user feedback digest

Summarize customer feedback across channels and share a Monday morning roundup.

Scheduler: 8AM Mon

Slack Message

Ticket triage and prioritization

Group Linear tickets, propose owners and priority, and draft a short plan for each epic.

Linear integration

Artifact: Plan

PRD outline from new requests

Automatically turn inbound asks into scope, success criteria, and open questions the team can align on.

Artifact: PRD Draft

Artifact: Plan

Prototype end-to-end flows in an isolated sandbox

Explore multiple approaches without touching mainline code.

Session links

Iteration commits

Prototype branches

Prototype multiple approaches in parallel and compare tradeoffs

Build two or three versions (e.g., different architectures or libraries) and deliver a side-by-side evaluation.

Refactor commits

Check runs

Realistic sample data and fixtures

Seed the sandbox with representative data and edge-case fixtures so the prototype behaves like production.

Seed script

Fixtures

Sample data

Ticket to PR implementation

Implement end-to-end on a clean branch and open a reviewable PR with a clear summary.

Ticket branch

PR Draft

Safe refactors with validation

Apply large mechanical refactors, run checks, and document risk and follow-ups in the PR.

PR

Coordinated multi-repo changes

Make consistent updates across repos and open linked PRs for review.

Multi-repo changes

PR

First-pass code review

Summarize intent, flag risks, and suggest improvements before humans dive in.

PR review comments

Suggested changes

Address review feedback

Automatically address requested changes and respond with clear rationale.

Iteration commits

Security and dependency scan

Check for vulnerabilities and risky dependencies and attach a short remediation plan.

Security scans

Remediation plan

Tests and CI confidence

Generate or update tests, run the suite, and iterate until everything is green.

Test generation

Check runs

End-to-end UI verification

Use computer use to validate key flows and produce a QA checklist with evidence.

Computer use

QA checklist

Bug reproduction and validation

Test the changes against the original bug report.

Computer use

GitHub issue

Release notes and rollout summary

Generate release notes and a rollout recap that teams can reuse.

Release notes

Rollout summary

Regression monitoring

Watch dashboards and alerts post-deploy and flag regressions early.

Dashboard watch

Regression alerts

Incident triage and mitigation

Collect logs and deploy context, then recommend a rollback or hotfix plan.

Incident context

Hotfix plan

Rapid iteration in isolated sandboxes

Explore multiple approaches without touching mainline code.

Prototype demos and documentation

Turn experiments into something you can share.

Feasibility checks and go / no-go signals

Validate assumptions before committing to a build.

Meet Oz

Run, manage, and orchestrate coding agents at scale

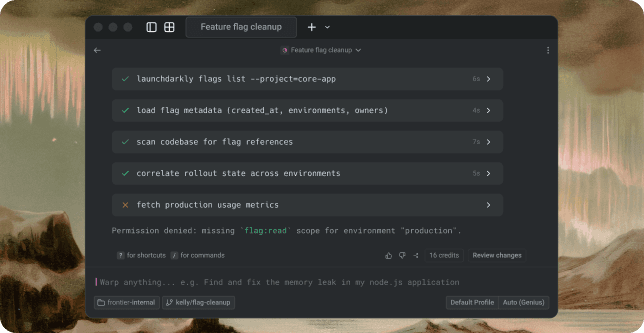

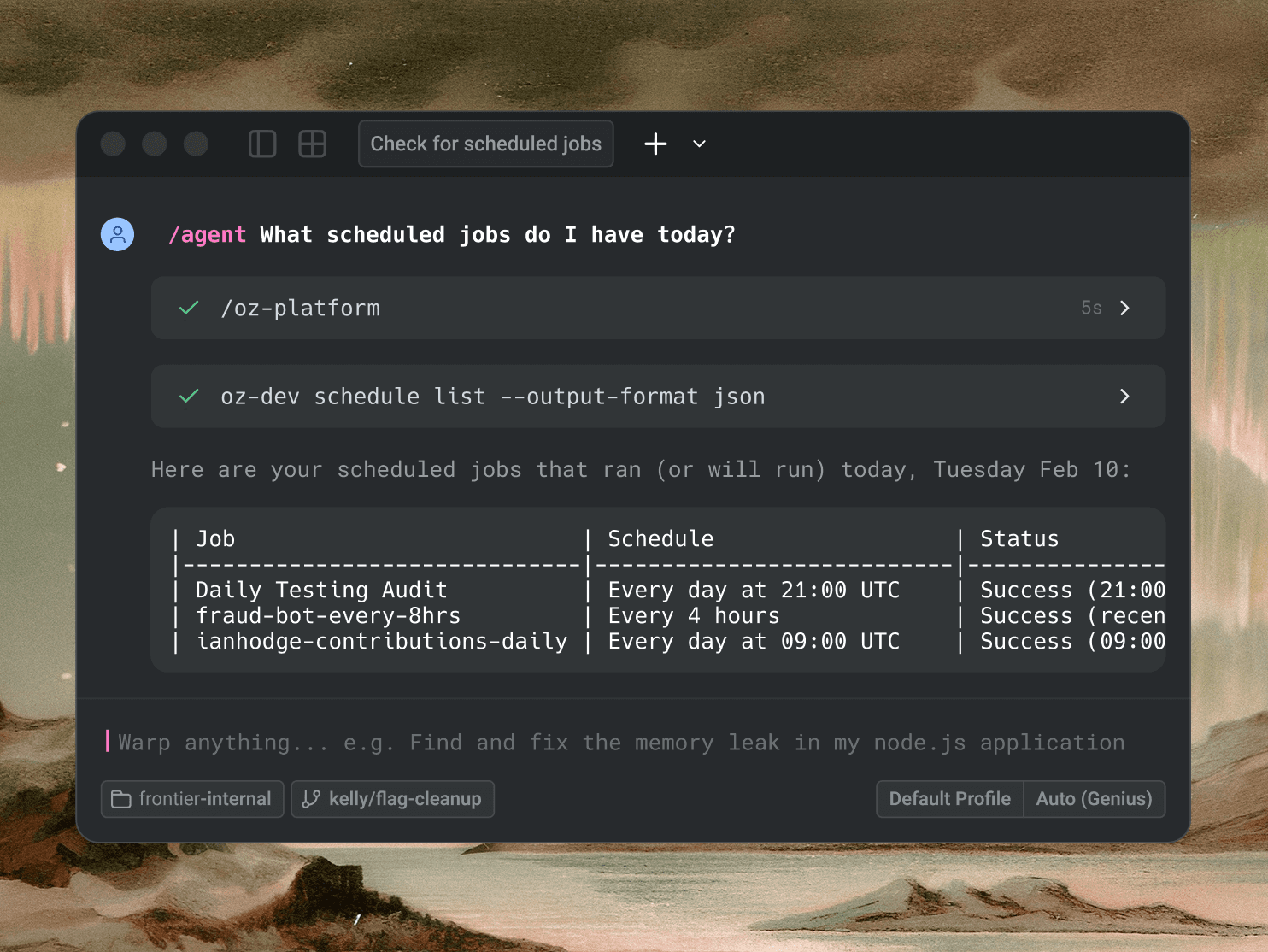

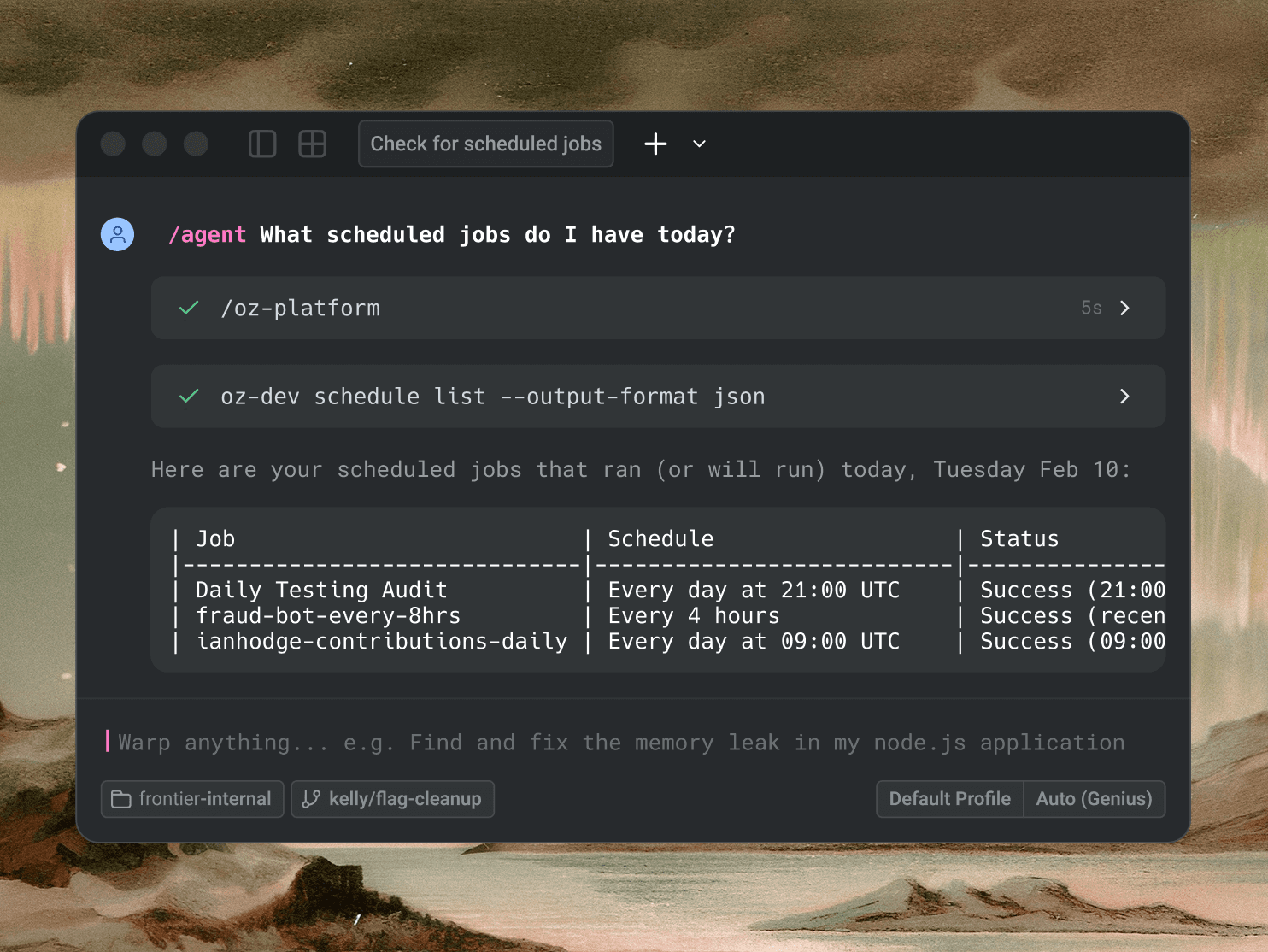

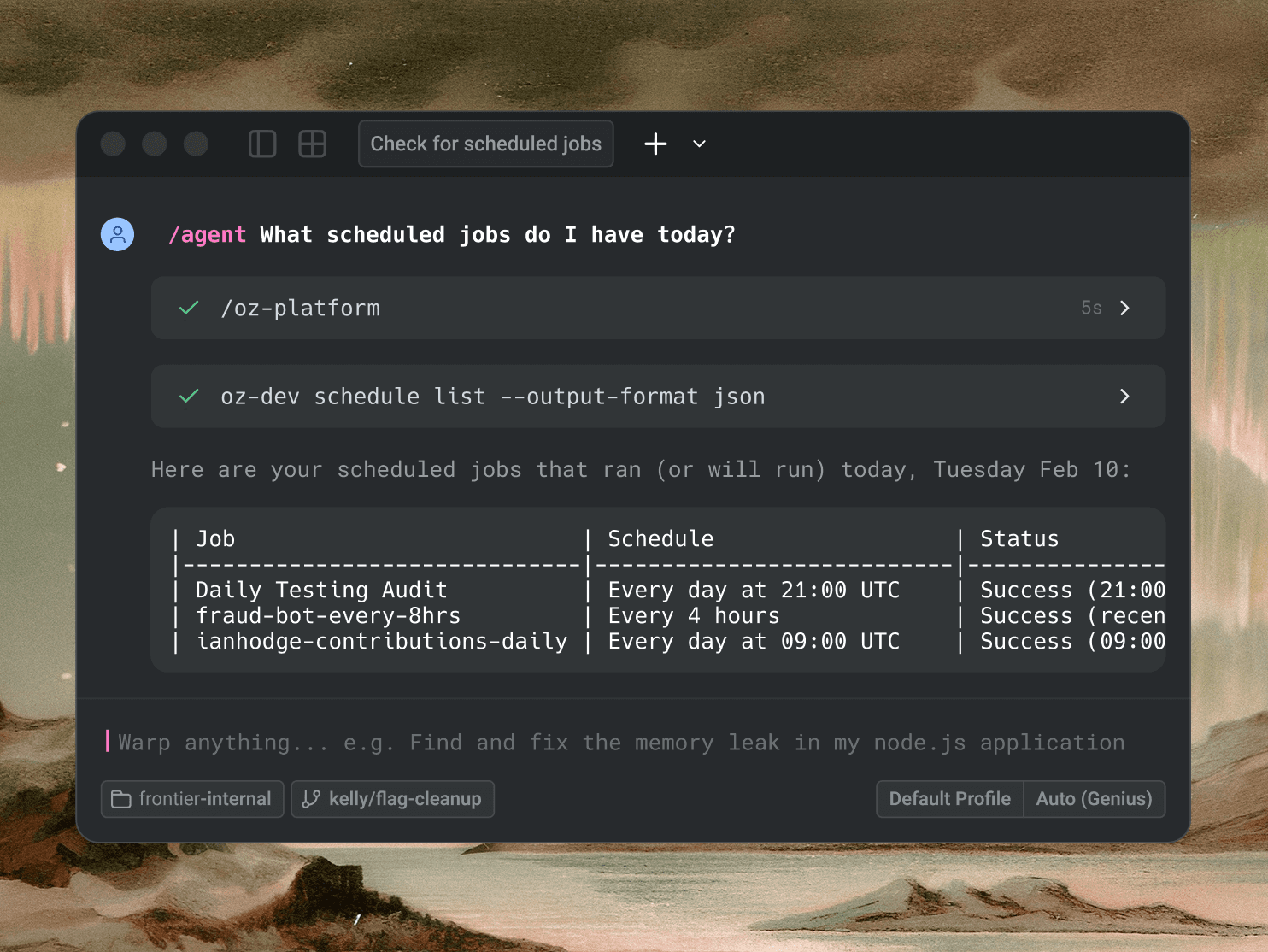

Turn agents into automations

Schedule agents to run like cron jobs and report back however you'd like. Oz agents are based on Skills.

Learn more →
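To make the cron comparison concrete, here is a minimal sketch of how a standard five-field cron expression selects a run time such as "8AM Mon". It illustrates generic cron semantics only; how Oz itself accepts schedules is not shown on this page, and the names `WEEKLY_DIGEST`, `field_matches`, and `cron_matches` are hypothetical.

```python
from datetime import datetime

# Standard five-field cron expression: minute hour day-of-month month day-of-week.
# "0 8 * * 1" means 08:00 every Monday (cron counts Sunday as 0, Monday as 1).
# WEEKLY_DIGEST is a hypothetical name used only for this illustration.
WEEKLY_DIGEST = "0 8 * * 1"


def field_matches(field: str, value: int) -> bool:
    """Match one cron field. This sketch supports only '*' and comma-separated integers."""
    if field == "*":
        return True
    return value in {int(part) for part in field.split(",")}


def cron_matches(expression: str, moment: datetime) -> bool:
    """Return True if `moment` falls on a minute selected by `expression`.

    Simplification: all five fields must match. Real cron treats day-of-month
    and day-of-week as alternatives when both are restricted.
    """
    minute, hour, day, month, weekday = expression.split()
    # Python's isoweekday() is Mon=1..Sun=7; cron uses Sun=0..Sat=6.
    cron_weekday = moment.isoweekday() % 7
    return (
        field_matches(minute, moment.minute)
        and field_matches(hour, moment.hour)
        and field_matches(day, moment.day)
        and field_matches(month, moment.month)
        and field_matches(weekday, cron_weekday)
    )
```

A scheduler built on this idea would evaluate `cron_matches(WEEKLY_DIGEST, now)` once per minute and start the agent run when it returns `True`.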

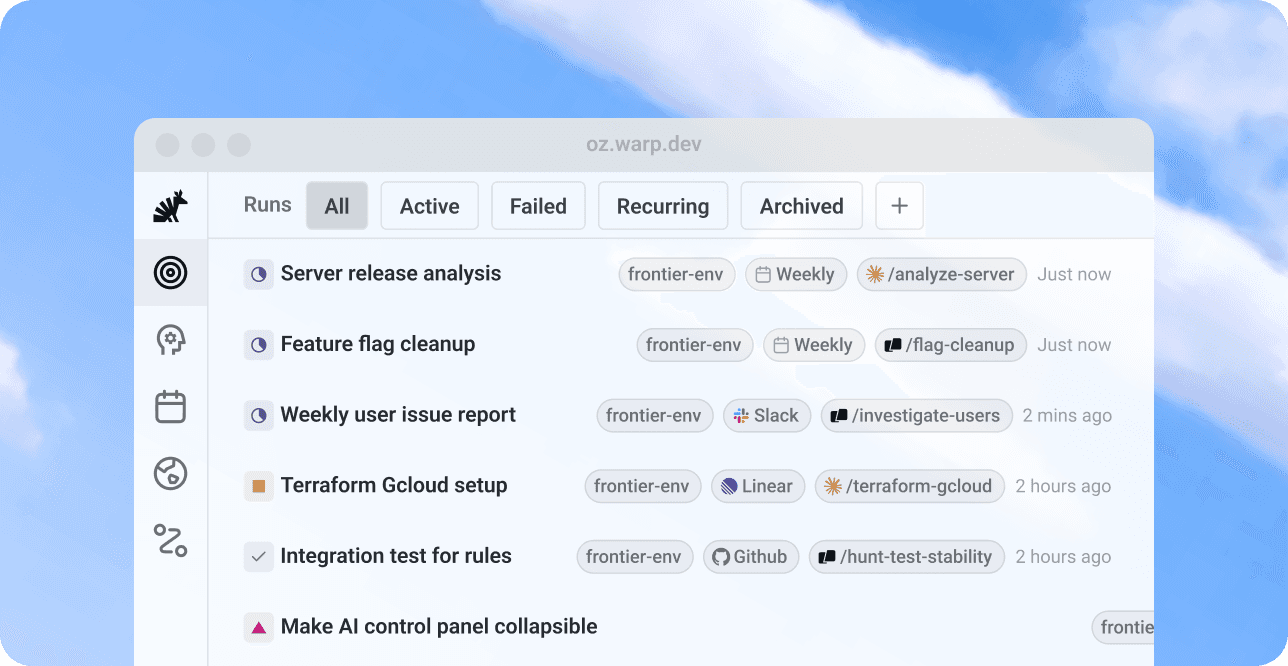

Unified control plane with best-in-class agent steerability

Start cloud agents from the Warp app, the web, or even your phone, and track them there too. Join agent sessions with one click from the CLI, web, mobile, or Warp.

Learn more →

One agent, multi-repo changes

Work with your source code across repos and make sweeping, coordinated changes in one go.

Learn more →

Multi-model and compatible with other AI coding tools

Oz comes with all the best models like Claude, Codex, and Gemini, and supports industry standards like Skills for quick onboarding.

Learn more →

Warp is the best terminal for building with agents

A modern terminal with a built-in code editor, so teams stay in flow from starting a feature through shipping it.

Pick up agent tasks directly in Warp, where you can review code, re-prompt agents, and start new agent (or non-agent) tasks.

Autonomous agents for enterprise

You've deployed Claude, Cursor, and Devin. Your team ships faster. So why are you still context-switching between 5 different agent workflows?

Designed for security. Built for organizations.

Enterprise-grade controls

SAML-based SSO and role-based access control for permission management

Your data, your control

SOC 2 Type 2 compliance; industry-leading data policies, including zero data retention

Privacy, even from agents

Secret redaction with custom regexes
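As a rough illustration of what regex-based secret redaction involves, the sketch below replaces anything matching a set of custom patterns before the text is passed along. It is not Warp's implementation or configuration format, which this page does not describe; `CUSTOM_PATTERNS`, `redact`, and the `acme_` token format are assumptions made for the example.

```python
import re

# Hypothetical custom patterns. The names and the "acme_" token format are
# illustrative assumptions, not a real configuration.
CUSTOM_PATTERNS = {
    # Shape of an AWS access key ID: "AKIA" followed by 16 uppercase letters or digits.
    "aws_access_key_id": r"\bAKIA[0-9A-Z]{16}\b",
    # Made-up internal token: "acme_" followed by 32 lowercase hex characters.
    "internal_token": r"\bacme_[a-f0-9]{32}\b",
}


def redact(text: str, patterns: dict[str, str] = CUSTOM_PATTERNS,
           placeholder: str = "[REDACTED]") -> str:
    """Replace every match of every pattern with the placeholder."""
    for pattern in patterns.values():
        text = re.sub(pattern, placeholder, text)
    return text
```

For instance, `redact("export KEY=AKIAIOSFODNN7EXAMPLE")` replaces the key with the placeholder and leaves the rest of the line untouched, so an agent reading terminal output would see the command but not the secret.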

Flexible hosting and compute

BYOLLM support and flexible hosting so your code stays inside your infrastructure

Centralized admin controls

Set agent permissions for your whole team; central usage reporting

Password manager integrations

Read secrets directly from 1Password or LastPass

Actively AI runs shared terminal aliases in Warp, keeping workflows centralized and always up to date.

Docker uses Warp to reduce onboarding time and give every engineer a shared source of truth.

Warp feels like a limitless agentic tool. Once you wire up the right connectors, integrations, and skills, you can orchestrate multiple agents and automate almost anything you can imagine.

AI Software Engineer, International Airline Co (50,000+ FTE)

Oz agents maintain our docs autonomously. New engineers onboard faster because the docs are current. On-call engineers go from generic alert to remediation plan in minutes. We went from stale, thin documentation to ever-living documentation that serves both humans and agents.

Staff Software Engineer, IT Software Co (5,000+ FTE)

Oz for every engineering role

Frontend

Fullstack

DevOps

Data Science

Frontend

Watch Dave (Product Lead at Warp) audit his web application for accessibility using our built-in auditor Skill.

Fullstack

Watch Roland (Product Engineer at Warp) generate a fix to our platform across our server and client repositories in a single prompt... all while he's on a lunch break.

DevOps

Watch Ben (Lead DevRel at Warp) build a Sentry alert monitor to proactively fix bugs using Oz + the TypeScript SDK.

Data Science

Watch Ian (Data Scientist at Warp) use Oz to monitor customer data in BigQuery and send a scheduled insight summary to his team on Slack.

Learn more

Try Warp

Download Warp and see what developing in a modern terminal feels like. Use Oz agents natively in Warp.

Make an account

Navigate to a repo

Start a coding task

Talk to our team

Use the Oz CLI, SDK, and API to launch local or cloud agents. Best for running parallel agents.

Request a demo

Learn about how Warp can help your specific organization

Get started with a trial
