In this post, I am going to outline what I believe are common misconceptions about AI-powered software development.

Misconception 1: AI Software Engineers are going to replace human engineers

This may happen eventually, but it’s not happening anytime soon.

Demos aside, the current iteration of LLMs are not smart enough to automate normal software development tasks (also, despite the cooler name, “agents” are just automation). Even for the most cultivated problem sets, an “AI Engineer” can only solve about 15-20% of the problems, and if you look closely at these problems they are extremely well specified and have clear validation criteria, making it easier for the AI to trial and error its way to success.

Problems in the real world don’t often come in this form. Looking at the open issues for Warp, all but perhaps a handful of trivial ones are not solvable today by an AI engineer; they have too much ambiguity, they need thought and product decisions, they are in complex parts of the codebase.

Moreover, even if AI Engineers get good at solving simple well-spec’d problems, that’s just going to leave more complex and interesting problems for humans to solve. There is effectively an infinite amount of software that the world needs built and for the foreseeable future we need humans to build it.

Misconception 2: LLMs are qualitatively different than other tools developers use

They certainly seem different in that the interaction is human like – you chat with them. And unlike other tools, they do things that are much more intelligent - they can understand and pattern match and reason and answer all sorts of questions. Moreover the marketing around “copilots” and “AI Engineers” is geared to make us look at AI as something more akin to a smart coworker than a hands-on tool.

I think this view is mistaken, at least for today’s models.

A few salient properties of tools compared to smart coworkers:

- Tools need operators. Coworkers don’t.

- The output of a tool varies directly with the skill and input of the user. This isn’t how it goes with your coworkers, but most definitely is how all useful AI development software works today.

- A tool works best with a tight feedback loop (more on this below).

Despite the marketing, all of the effective uses of LLMs to power development today require a human to prompt them, correct them, guide them, way beyond the level you would ever do this with a colleague. They are still tools.

Misconception 3: LLMs are harmful to development

On the other side of the spectrum, there is a class of traditionalist developers that dismisses AI in development as somehow harmful. I’ve seen articles on how AI leads to people shipping poorly factored, buggy, repeated code. How it encourages developers not to learn traditional tools, and so on.

This view is equally wrong to that of the people hyping AI as an intelligent coworker, but in the opposite direction.

AI as a tool is ridiculously powerful – just watch some of the videos of me building stuff in Agent Mode in a tenth of the time it would have otherwise taken me.

But the key thing to realize is that I was only able to do this because I used the AI in a certain way. The AI made a lot of mistakes as I was trying to accomplish the tasks I set out to do, but because I’m a pretty experienced engineer I was able to spot those mistakes and correct them. I was able to prompt the AI in a way that made it more likely to succeed. I was able to spot check its work. I knew how to get it to remember things and recognize patterns.

All this is to say: I had to use it like I would any powerful tool to get the result that I wanted.

And of course, if I didn’t know what I was doing and blindly trusted the AI to do stuff for me, that could have led to bad results.

But that’s on the engineer operating the tool, not the tool itself.

Misconception 4: The best way to use AI Agents is to put them in a sandbox and wait for a result

This is intuitively appealing: “I’ll have a margarita while an AI engineer messes around trying to code for me.” But unfortunately if you go this route I think you’re going to spend a ton of money on inference waiting for generally poor outcomes right now.

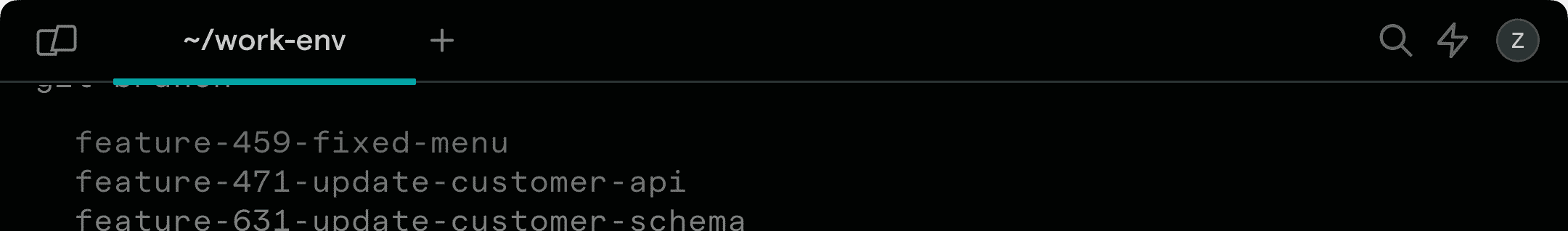

I believe the best experience at the moment is the exact opposite: AI should have a tight feedback loop and work in your existing context under close supervision.

Using AI is an iterative process, in the same way that writing code and fixing errors is, so the faster a human can prompt the AI, adjust its result, reprompt, and so on, the faster you get to a good result (see my post on Ask & Adjust for more on how humans and AI work best together).

This property is true of pretty much every developer tool by the way: iteration speed (fast builds, fast server restarts, etc) drives productivity.

Misconception 5: AI is making development less interesting or fun

This one is subjective, but to me it’s just the opposite.

The parts of development that I like the least are the ones that AI is best at tackling right now. AI can fix configuration, save me from writing boilerplate code, and help me navigate complex documentation. It does the drudge work and lets me do the harder thinking, the architecture, the puzzle solving. It lets me ship better products, more quickly, which is what I actually care about.

I found this especially true with the AI feature we just launched, Agent Mode. As I learned how to use it, it became more and more empowering to work closely with it; it made me feel way more capable as an engineer; the dopamine release from going back and forth with it was almost like I was playing a video game.

I don’t expect everyone to have this experience today – it’s a controversial take I guess. But in the long run I strongly believe the best engineers will want to use AI as much as possible for the shitty parts of their job so they can focus on the fun stuff.